Guest article by Konradin Metze

Journal of Unsolved Questions, 2, 2, Preface, XV-XVII, 2012

Konradin Metze, MD, PhD, pathologist, is leader of the research group Analytical Cellular Pathology, member of the National Institute of Science and Technology on Photonics Applied to Cell Biology (INFABIC), professor in the postgraduate courses of Medical Pathophysiology and Medical Sciences at the University of Campinas, Brazil, and academic editor of the scientific electronic journal PLOS ONE.

e-mail: kmetze at fcm.unicamp.br

The evaluation of science is currently a highly debated matter at universities and research institutions, in scientific journals, and also in the media in general. Researchers want to produce science of high impact. The aim of this essay is to make some critical reflections on the impact of science and especially its evaluation.

First, we have to define the concept of impact of science. It is necessary to think about who or what will be influenced by science. According to this question we can stratify the impact of science into four types:

1. The intellectual impact, as the degree of changes of scientific concepts caused by the development or improvement of theories or hypotheses based on observations or theoretical reflections.

2. The social impact, as the degree of changes in life or environment of individuals or groups of people caused by scientific theories or hypotheses.

3. The financial impact as the degree of economic changes of “corporations” supporting scientific activity, such as companies, universities, or governmental departments due to the activity of scientists.

4. The media impact as the degree of the presence of research or researchers in the media.

Science has always had a strong intellectual and social dimension. Its financial and media impact, however, has gained increasing importance in recent decades.

Regarding the question of measurement, the financial impact can easily be defined as a variable proportional to the money spent on research or earned from patents, newly developed products etc. Nowadays, financial impact also includes changes of share values at the stock exchange due to new inventions or product recalls (for instance of pharmaceutical drugs). We are also able to estimate the media impact in a relatively easy way, for instance by quantifying the number or extent of reports on scientific discoveries or research groups in the lay media or by public opinion research.

Whereas the measurement of the financial and media impact is to some degree easy, this is not true for the intellectual and social impact, since these cannot be measured in a direct way. For this purpose we have to look for “substitute variables” (proxies), which can give only rough estimates in an indirect manner. The lack of a generally accepted way of measurement naturally provokes a continuous, broad discussion.

One of the main problems is that an impact can only be seen from a historical point of view; that is, we need some observation time in order to know how the community was influenced by a publication, if this ever happened. For the intellectual impact, merely counting the number of publications of a researcher, a method unfortunately still in use, must be considered inadequate, because it does not measure the reaction of the community.

A better proxy for the intellectual impact is the number of citations that a paper receives in other scientific contributions. This concept was introduced by E. Garfield in the 1960s. Today there are several data sources, for instance Web of Science, produced by Thomson Reuters. There we can find citations to scientific contributions published as early as 1898 within a selected pool of journals, with about 8000 journals in the Science Edition and about 2700 journals in the Social Sciences Edition of 2010. Books and proceedings have recently begun to be included. Citation counts cited in this essay come from this data source. A similar service is offered by SCOPUS (Elsevier), where the screening for citations of a paper is done in a considerably larger pool of periodicals. This system, however, includes only citations from 1996 on. A freely available, web-based program created by Harzing lists citations of earlier publications found on web sites [1].

The main question is whether we can consider the number of citations as a reliable estimate of the intellectual impact of a publication. Most researchers believe that we can. Without any doubt it is better to use the number of citations to a publication than only the impact factor of the journal where it was published.

The impact factor of a journal is roughly an estimate of the “mean citedness” of an article in this periodical [2]. Its uncritical use for the evaluation of individual manuscripts, single researchers or research groups is detrimental to science, because it creates a vicious circle between bureaucrats, researchers, editors, and the impact factor itself [2]. Furthermore, from the point of view of scientific methodology it is nonsense to use the proxy of a proxy in order to measure something. Therefore, for the evaluation of the intellectual impact, the number of citations to the work under discussion is without any doubt better than the impact factor.

Some critical remarks have to be made, however. It is well known that purely methodological papers or technical notes, which do not create or modify hypotheses or theories, may get very high citation counts. Here are some examples: A meeting abstract written by Karnovsky [3], with a short description of a fixative for electron microscopy, has been cited 7470 times since 1965. A method for quantifying proteins, described by Lowry and co-workers [4], has been cited 299,360 times since 1951. An interesting phenomenon was caused by a publication in a crystallography journal in 2008. In this review paper [5], G. Sheldrick described a computer program for the analysis of molecular structures. Furthermore, a link to its open internet access was given, and the phrase added: “This paper could serve as a general literature citation when one or more of the open-source SHELX programs … are employed in the course of a crystal-structure determination.” In about four and a half years after publication the paper accumulated 26,660 citations. In this case, the citations can be seen as a kind of payment for the free use of a computer program for scientific analysis.

Since the beginning of 2009, all manuscripts accepted by the International Journal of Cardiology must contain a citation to an article on ethical authorship [6] written by the editor in the same journal in January 2009. To date, 1976 citations can be counted.

In contrast, we can demonstrate that highly relevant, revolutionary and paradigm-changing publications may have relatively low citation counts. Einstein was honored with the Nobel Prize in Physics for his work on the photoelectric effect, but his publications on this topic were rarely cited. His main publication on the photoelectric effect from 1905 [7] got 695 citations, which is equivalent to a mean of less than 7 citations per year. Only 89 citations to a subsequent paper on radiation [8] can be found in Web of Knowledge. Georges Lemaître, a theoretical cosmologist, created the theory of the expansion of the universe, which is also called the “big bang theory”. In 1927, he published his principal ideas in a paper in French, which was cited only 177 times (including 21 erroneous citations) [9]. Four years later he summarized his theory in a communication to Nature [10]. According to Web of Science there are only 24 correct citations (and an additional 21 incorrect ones) to this paper. Finally, the revolutionary description of the DNA structure by Watson and Crick [11] has been cited 4065 times since 1953. In other words, there are fewer citations to the first description of the DNA helix than to Karnovsky’s abstract with a short description of a fixative solution.
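The comparison of citation counts given so far can be made slightly more concrete by dividing each count by the age of the paper. The following is only a minimal sketch: the counts and publication years are those quoted in this essay, whereas the reference year 2012 (the year of this essay), the variable names and the function `citations_per_year` are assumptions introduced for illustration.

```python
# Citation counts and publication years as quoted in this essay (Web of Science).
# ASSUMPTION: the counts are treated as if taken in 2012, the year of the essay.
REFERENCE_YEAR = 2012

# (label, year of publication, total citations)
papers = [
    ("Lowry et al., protein measurement [4]", 1951, 299360),
    ("Karnovsky, fixative abstract [3]", 1965, 7470),
    ("Watson and Crick, DNA structure [11]", 1953, 4065),
    ("Einstein, photoelectric effect [7]", 1905, 695),
]


def citations_per_year(total, published, as_of=REFERENCE_YEAR):
    """Mean number of citations per year since publication (hypothetical helper)."""
    years = as_of - published
    if years <= 0:
        raise ValueError("the paper must be older than the reference year")
    return total / years


# Mean yearly citation rate for every paper, keyed by its label.
rates = {label: citations_per_year(total, year) for label, year, total in papers}

# Print the papers from the highest to the lowest yearly rate.
for label, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{label}: {rate:.1f} citations per year")
```

Under these assumptions the ordering of the four papers does not change: the two methodological contributions remain far ahead of the two paradigm-changing ones, so the age of a paper alone does not explain the discrepancy.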

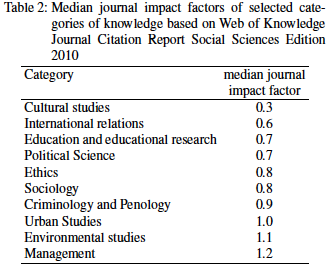

Citations are mainly found in papers published in the same area of knowledge or an adjacent field. If the community of researchers is large and very active, the chance that a paper published in the same field will be cited increases. This can easily be seen when we compare the impact factors of journals of different subject areas. Thomson Reuters groups journals together according to their fields of knowledge. Tables 1 and 2 show the median values of the impact factors of some selected categories. Looking at these data, it is obvious that the probability of a publication from mathematics being cited is considerably lower than that of a paper from medicine, and that the curriculum of an “average” tissue engineer or molecular biologist will probably contain more citations to his papers than that of a world-class mathematician. Therefore, different scientific areas should never be compared by the number of citations to their publications.

This is sometimes also true within a scientific discipline. The average citations to papers in the field of tropical medicine are much lower than those in oncology or cardiovascular medicine. How can we interpret these data? One main reason is that there are fewer researchers who would potentially cite an article in the field of tropical medicine than researchers working in the field of oncology or cardiovascular medicine. Moreover, companies from the pharmaceutical industry are generally not interested in developing new drugs against tropical diseases for economic reasons. In this case the lack of economic impact reflects negatively on the development of science and the increase of intellectual impact. The example of the “neglected diseases” illustrates well the existence of important conflicts between the intellectual, social and financial impact of science.

University and governmental bureaucrats might be tempted to use the citation numbers of the work of research groups uncritically when deciding on the distribution of support. As an example, the personnel and financial resources for mathematics or history might be reduced and transferred to molecular biology, tissue engineering and other new technologies. Unfortunately, this is happening all over the world with increasing frequency. The consequences will be disastrous in the long run. A vicious circle may be created: some scientific disciplines, the strongest ones, will drain more resources, get more researchers and in consequence produce more papers. This increases the number of citations to their work and the impact factor of the journals where they publish, and thus the possibility to get new resources. In that way, smaller scientific disciplines might collapse. The university as an ecosystem, with its plurality of thinking, will lose some of its species. Academic life will be more monotonous, but this is not the main problem. We will be unable to respond to the challenge of the social impact in the long run. Science will not be prepared to face relevant problems of mankind in an adequate way and to develop solutions in time. The world population is still increasing, and natural resources such as clean water or food are getting scarce. Environmental pollution and global warming continue to be unresolved problems. Many social, ethnic and religious conflicts generate violence. Therefore, the study of culture, criminology, political sciences, international relations, water resources and food science will probably gain increasing importance in the future. If one looked only at the impact factors shown in Tables 1 and 2, these areas of knowledge would certainly not get priority at the universities. This would be a fatal error for society.

In summary, although the measurement of the intellectual impact of science by counting citations to publications seems to be the best proxy available at the moment, this procedure should be treated with great caution. For a global evaluation of science, its social impact must be evaluated together with the intellectual one.

Literature

1. Harzing A. Publish or Perish. Available at http://www.harzing.com/pop.htm (last accessed 1 June 2012).

2. Metze K. Bureaucrats, researchers, editors, and the impact factor: a vicious circle that is detrimental to science. Clinics 65 (10): 937-940, 2010. Free at http://www.scielo.br/scielo.php?script=sci_arttext&pid=S1807-59322010001000002 (last accessed 1 June 2012).

3. Karnovsky MJ. A formaldehyde-glutaraldehyde fixative of high osmolality for use in electron microscopy. Journal of Cell Biology 27: A137, 1965.

4. Lowry OH, Rosebrough NJ, Farr AL, et al. Protein measurement with the Folin phenol reagent. Journal of Biological Chemistry 193: 265-275, 1951.

5. Sheldrick GM. A short history of SHELX. Acta Crystallogr A 64: 112-122, 2008.

6. Coats AJS. Ethical authorship and publishing. Int J Cardiol 131: 149-150, 2009.

7. Einstein A. Ueber einen die Erzeugung und Verwandlung des Lichtes betreffenden heuristischen Gesichtspunkt [Generation and conversion of light with regard to a heuristic point of view]. Annalen der Physik 17: 132-148, 1905.

8. Einstein A. Ueber die Entwicklung unserer Anschauungen ueber das Wesen und die Konstitution der Strahlung [On the evolution of our vision on the nature and constitution of radiation]. Physikalische Zeitschrift 10: 817-826, 1909.

9. Lemaître G. Un univers homogene de masse constante et de rayon croissant rendant compte de la vitesse radiale des nebuleuses extragalactiques [A homogeneous Universe of constant mass and growing radius accounting for the radial velocity of extragalactic nebulae]. Annales de la Societe Scientifique de Bruxelles

11. Watson JD, Crick FHC. Molecular structure of nucleic acids – a structure for deoxyribose nucleic acid. Nature 171: 737-738, 1953